首页 > 基础知识 > 正文

原创文章,转载请注明出处!

本文链接:http://daiwk.github.io/posts/knowledge-matrix.html

机器学习中的矩阵、向量求导

标签:机器学习中的矩阵、向量求导

1970-01-01

目录

数学基础

https://www.cis.upenn.edu/~jean/math-deep.pdf

矩阵、向量求导

常用梯度

交叉熵

mf1_v = [0.2, 0.2, 0.3]

mf2_v = [0.1, 0.2, 0.3]

lr1_v = [0.1]#, 0.2, 0.3]

lr2_v = [0.1]#, 0.2, 0.3]

bias_v = [0.1]#, 0.1, 0.2]

mf1 = Variable(torch.Tensor(mf1_v), requires_grad=True)

mf2 = Variable(torch.Tensor(mf2_v), requires_grad=True)

lr1 = Variable(torch.Tensor(lr1_v), requires_grad=False)

lr2 = Variable(torch.Tensor(lr2_v), requires_grad=False)

bias = Variable(torch.Tensor(bias_v), requires_grad=False)

ss = torch.sigmoid(mf1.dot(mf2) + lr1 + lr2 + bias)

ss.backward()

print "torch.sigmoid(mf1.dot(mf2)+ lr1 + lr2) GRAD:", mf1.grad

print "Check torch.sigmoid(mf1.dot(mf2) + lr1 + lr2) GRAD:", ss * (1 - ss) * mf2

mf1.grad.data.zero_()

print mf1.grad

然后看看正例和负例的loss的梯度:

## z = wx+b

## cross-entropy loss = -(y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z)))

## cross-entropy loss's w gradient: x(sigmoid(z)-y)

## cross-entropy loss's b gradient: (sigmoid(z)-y)

ss = torch.sigmoid(mf1.dot(mf2) + lr1 + lr2 + bias)

positive_loss = -torch.log(ss)

positive_loss.backward()

print "positive loss mf1 GRAD:", mf1.grad

print "Check positive loss mf1 GRAD:", mf2 * (ss - 1) # y=1

print "positive loss mf2 GRAD:", mf2.grad

print "Check positive loss mf2 GRAD:", mf1 * (ss - 1) # y=1

mf1.grad.data.zero_()

mf2.grad.data.zero_()

print mf1.grad

print mf2.grad

ss2 = torch.sigmoid(mf1.dot(mf2) + lr1 + lr2 + bias)

negative_loss = -torch.log(1-ss2)

negative_loss.backward()

print "negative loss mf1 GRAD:", mf1.grad

print "Check negative loss mf1 GRAD:", mf2 * (ss) # y=0

print "negative loss mf2 GRAD:", mf2.grad

print "Check negative loss mf2 GRAD:", mf1 * (ss) # y=0

mf1.grad.data.zero_()

mf2.grad.data.zero_()

print mf1.grad

print mf2.grad

举例

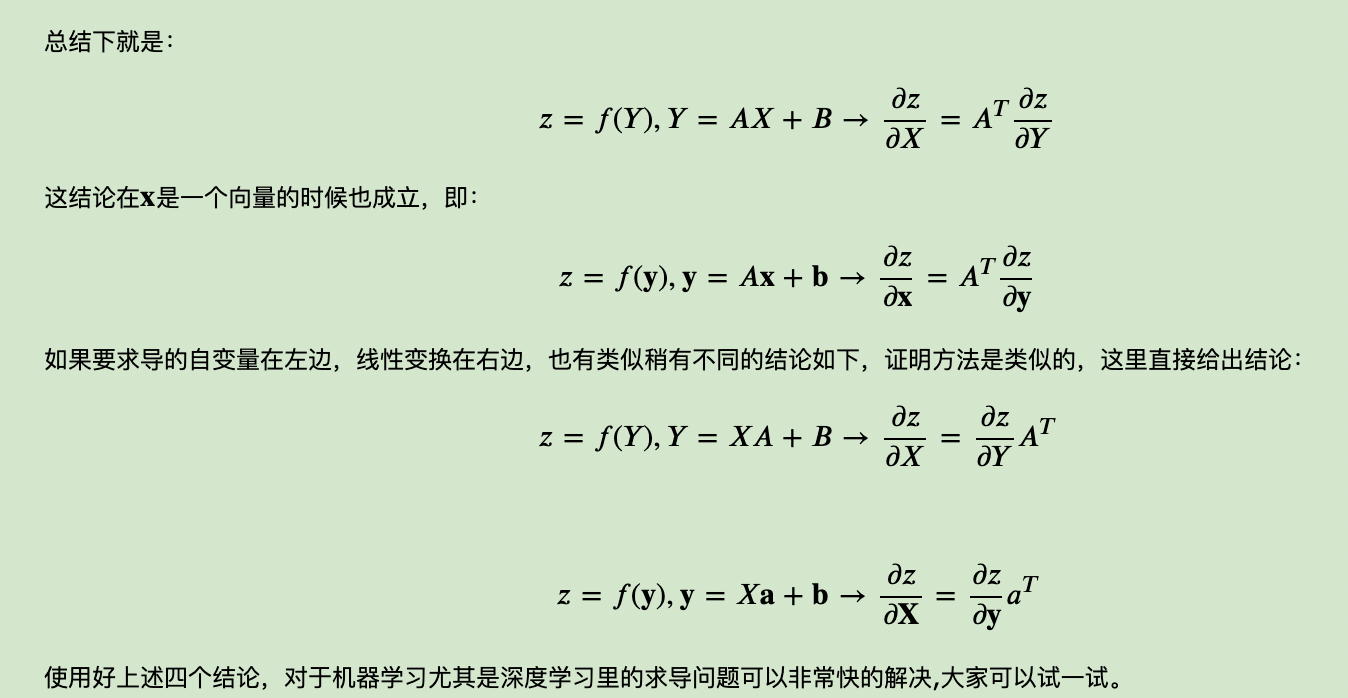

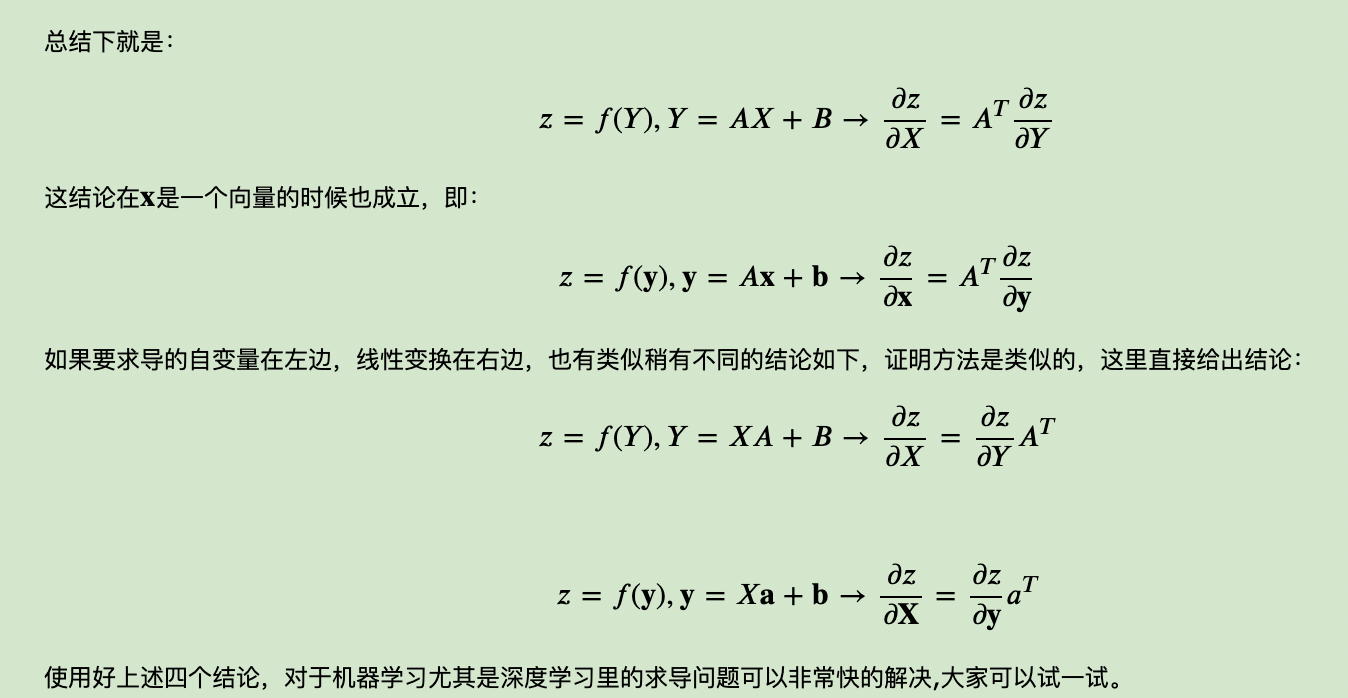

A是m*n,X是n*k,AX是m*k

Y=reduce_sum(AX)对X的导数就包括

- reduce_sum对AX的导数:一个全1的m*k的矩阵

- AX对X的导数:A^T,n*m

参考https://www.cnblogs.com/pinard/p/10825264.html

所以这个就是 A^T * 全1矩阵,也就是n*m和m*k相乘,得到的n*k,和原来的X一样的维度

原创文章,转载请注明出处!

本文链接:http://daiwk.github.io/posts/knowledge-matrix.html

上篇:

matplotlib

下篇:

mmr

comment here..